Backward Induction

Last Updated on 28. April 2023 by Martin Schuster

Backward induction is a game-theoretic concept that can be applied to sequential or repeated games. The aim is to find games that are subgame perfect. This concept and related terms are explained best by an example.

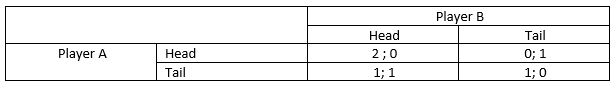

Suppose we have two players. They play a game where player A first puts a coin on the table, and then B puts a coin on the table. B can see what side of A’s coin is up. The pay-off matrix shows the pay-offs for all possible combinations.

If both coins display the Head, player A wins 2, and B receives nothing. If A’s coin shows Head and B’s coin shows Tail, A wins nothing, and B receives 1.

If A’s coin shows Tail and B’s coin shows Head, A wins 1 and B wins 1. If A’s coin shows Tail and B’s coin shows Tail, A wins 1, and B receives 0.

In a single shot simultaneous game, there is no Nash equilibrium. But player A would favor [Head, Head] since it promises the highest reward. To see if this is plausible, we can use a different form than the pay-off matrix to display the game – the extensive form.

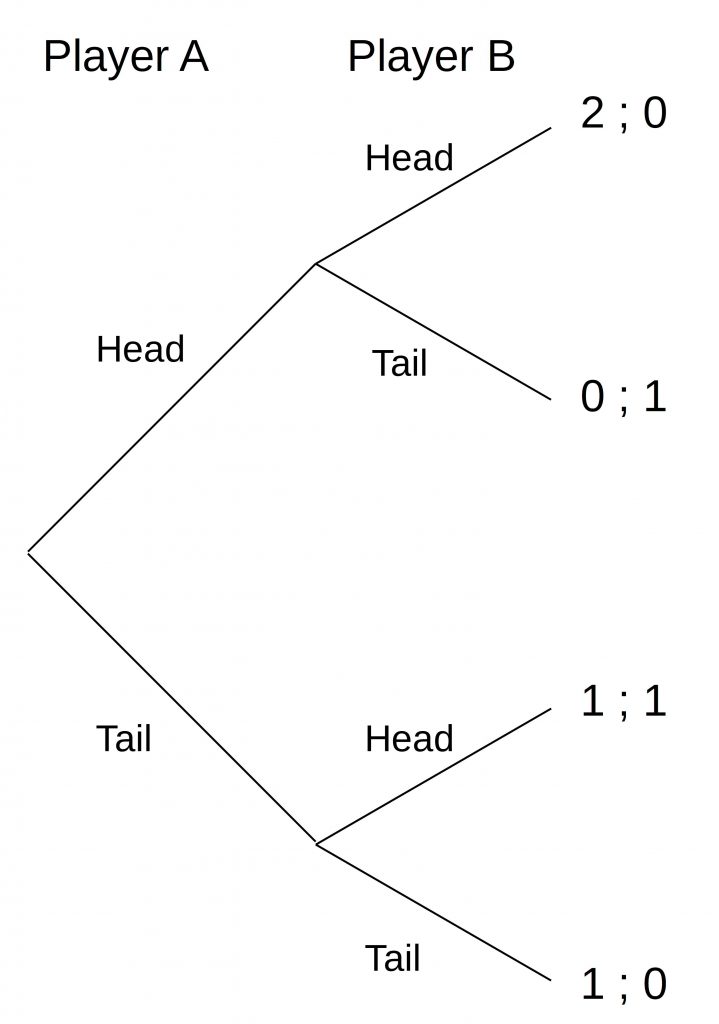

This game tree shows the pay-offs for player A and B according to their strategies. The game tree consists of two subgames. One subgame is the decision of player A and the other subgame is the decision of player B. A subgame starts with a decision node and ends with a decision node.

With this decision tree, we can go backward from player B’s decisions.

In the upper branch, the best strategy for player B is to choose “Tail” since it yields a pay-off of 1 compared to 0 when playing “Head”.

In the lower branch, the best strategy for player B is to choose “Head” since it yields a pay-off of 1 compared to 0 when playing “Tail”.

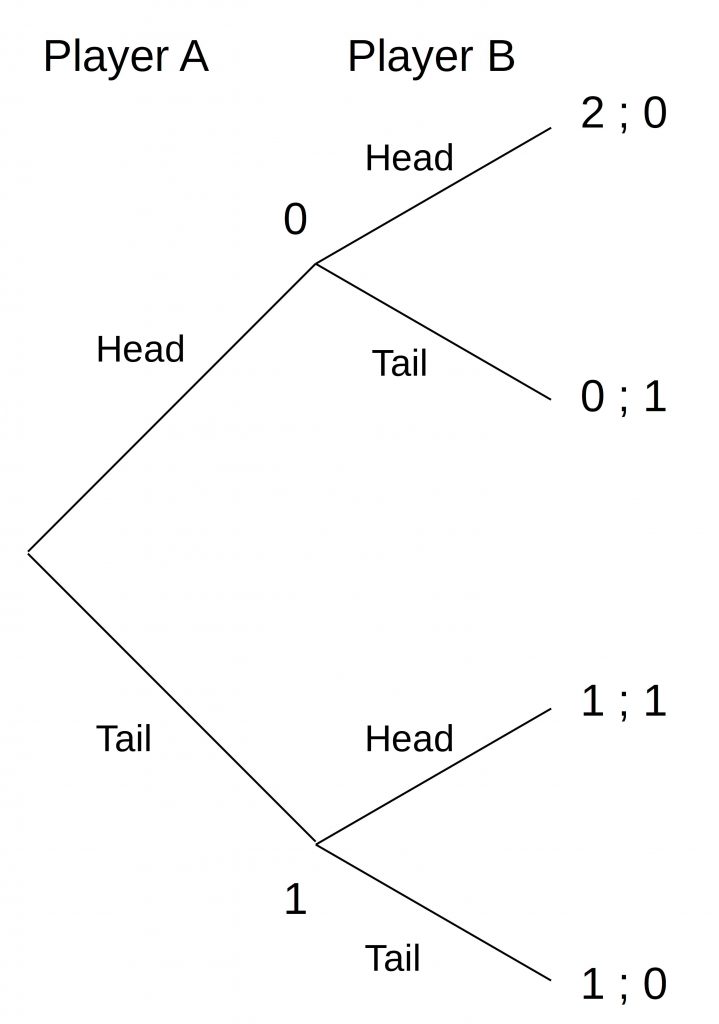

The following figure displays the expected pay-offs for A.

You can see that the branch “Head” promises a pay-off of 0 and branch “Tail” a pay-off of 1. Hence, rational player A chooses Tail.

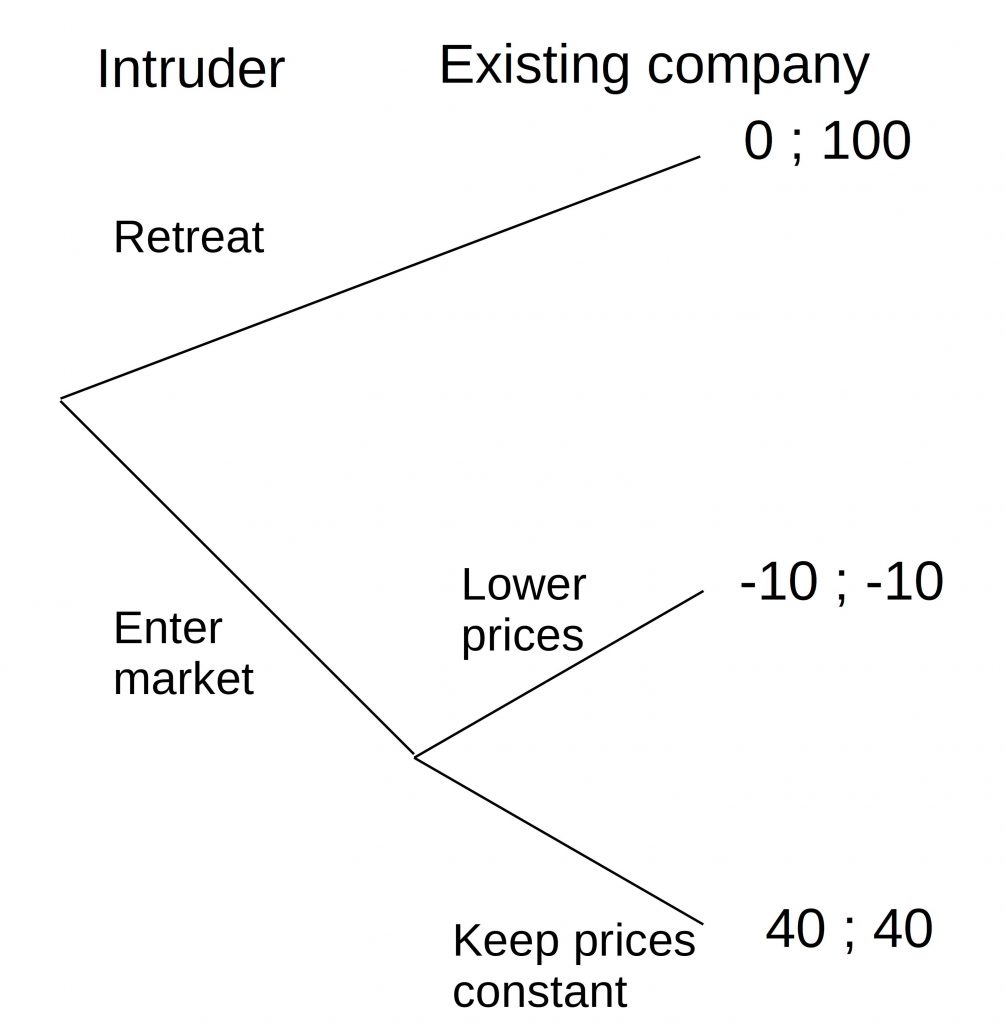

Backward induction can be used to evaluate threats and promises. To demonstrate this, we use another example.

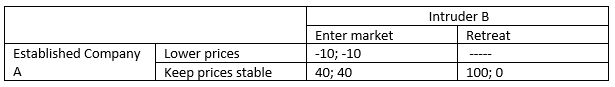

Imagine we have two companies A and B. One is already present in a city. Another wants to enter this market. The existing company A threads the intruder B to lower the prices and wage a price war if B really enters its market. In this case, both suffer a loss of -10. If the existing company keeps the price stable and the intruder enters the market, both make a profit of 40 each. If the intruder retreats, the existing company makes a profit of 100.

Let’s consider the pay-off matrix again.

The question is, is the threat of lowering the prices by the existing company credible? Let’s have a look at the decision tree.

In case of a market entry, the existing company A would choose to keep the prices constant because it promises a profit of 40 instead of a loss of -10. This would yield a pay-off of 40 to the intruder. Compared to the profit of 0 in case of a retreat, this is favorable. As a consequence, the intruder enters the market, and the existing company keeps the prices constant. Hence, the thread is not plausible.

Of course, this example is not perfect, and it is likely that companies will follow another rationale. This game is only a one-shot game. The players cannot change their decision in the future. In reality, the existing company might have more money and could wage the price war until the intruder gets bankrupt and leaves the market forever, yielding high profits in the future that set off the loss in the price wartime.

Subgame Perfectness

A sequential (or repeated) game is subgame perfect if all subgames (strategies) follow a rational decision. It doesn’t mean that the overall outcome is optimal.

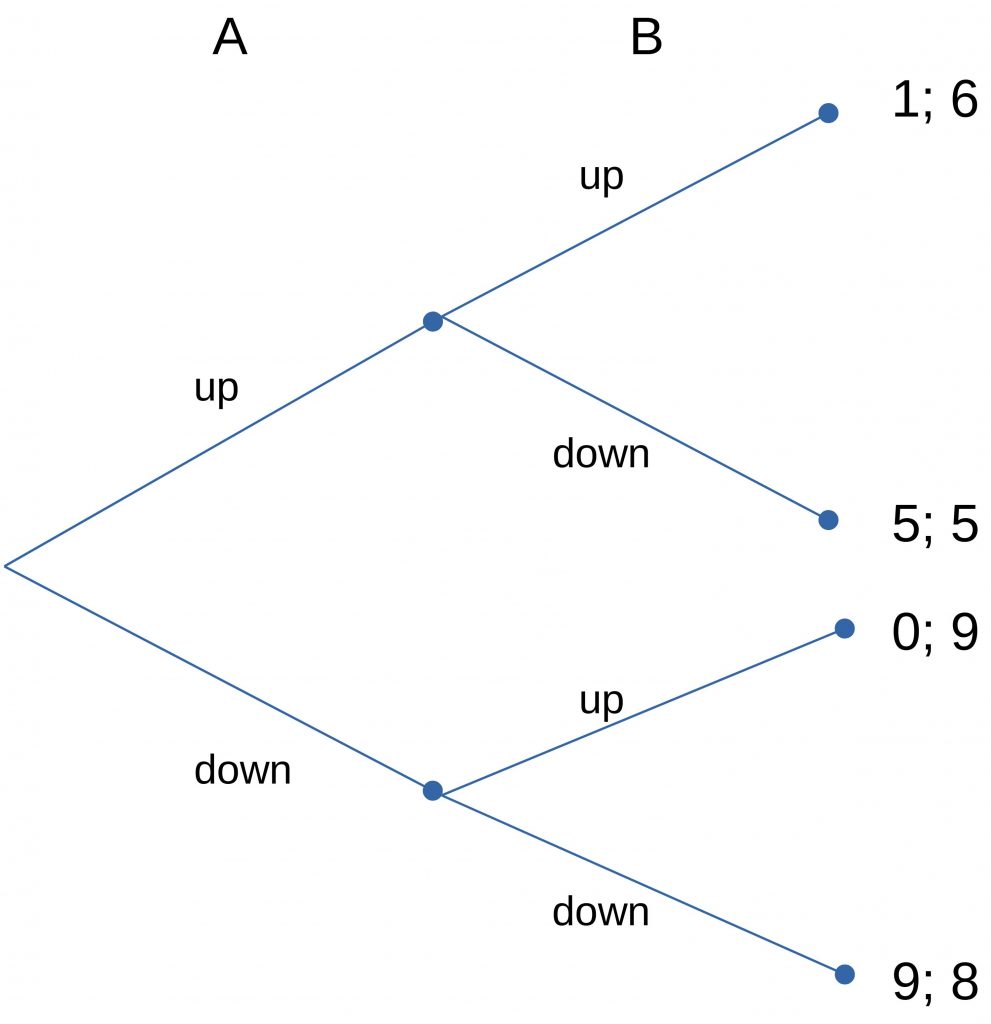

Suppose we have the following decision tree:

We have two subgames. The first is the one of player B, and the second one is the subgame of player A.

If A plays “up” B’s rational decision would be to play “up” too because it promises a pay-off of 6 instead of 5. This would leave A with an expected pay-off of 1.

If A plays “down” B’s rational decision would be to play “up” because it promises a pay-off of 9 instead of 8 when playing “down”. This would leave A with an expected pay-off of 0.

So, rationally, A can choose between playing “up” with a pay-off of 1 and “down” with a pay-off of 0. If A chooses “up” because it brings him the higher pay-off, the game is subgame perfect.

A Pirate Example

Suppose a group of pirates wants to divide their loot of 100 coins. The wildest pirate makes a suggestion who gets what share. If 50% or more vote for the suggestion, it is accepted. If less than 50% of the pirates vote for the suggestion, the suggesting pirate is thrown overboard, and the next least wild pirate has to make a suggestion.

We have a few assumptions:

- Pirates act rationally.

- Nobody wants to go overboard.

- There is an order of how wild pirates are. There are no two pirates that are equally wild.

- The suggesting pirate can vote too.

- Pirates that went overboard cannot vote and receive no share of the loot.

- Pirate N is wildest, pirate 1 is the least wild.

- Coins cannot be divided.

Let’s consider a few cases:

Two pirates

P2 suggests: P2 receives 100 coins, P1 receives 0.

P2 votes for the suggestion, P1 votes against it. 50% of the votes voted for the suggestion hence it is accepted.

Three pirates

If the suggestion is rejected, we will continue with two pirates. Then, P2 would receive 100 coins, P1 0 coins.

Hence, P1 accepts every suggestion that promises him more than 0 coins.

P3 suggests P3 receives 99 coins, P2 receives 0 coins, P1 receives 1 coin.

66.67% vote for the suggestion.

Four pirates

If the suggestion is rejected, P2 receives 0 coins. Hence, P4 has to bribe P2.

P4 suggests that P4 receives 99 coins, P3 receives 0 coins, P2 receives 1 coin, P1 receives 0 coins.

50% vote for the suggestion.

Five pirates

If the suggestion is rejected, we continue with four pirates, pirates P3 and P1 receive 0 coins. Therefore, P5 must bribe them. P5 suggests P5 receives 98 coins, P4 receives 0 coins, P3 receives 1 coin, P2 receives 0 coins, P1 receives 1 coin.

Three pirates out of five (60%) vote for the suggestion.

Six to 200 pirates

This can continue until 200 pirates. Then it becomes tricky.

201 pirates

P201 has not enough coins to bribe enough pirates and keep something for himself. Hence, he needs to give away everything.

He gives 100 pirates 1 coin each and keeps 0.

101 out of 200 votes support this suggestion. P201 remains on board but without profit

202 pirates

P202 has to bribe all pirates that would get nothing under P201. He gives away 100 coins and keeps 0 coins.

101 out of 202 votes support the suggestion, exactly 50%. P202 remains on board.

203 pirates

P203 would have to bribe all those pirates that would receive nothing under P202. But 100 coins are not enough to gain the majority. He would need 102 votes.

Hence, he goes overboard for sure.

204 pirates

P204 has not enough coins to bribe the majority. But does he go overboard? No. P203 doesn’t want to be the next pirate that has to make a suggestion. Hence he will support P204’s suggestion. P204 has to bribe 100 of the first 200 pirates.

He receives 102 votes and stays on board.

205 pirates

P205 cannot count on the votes of P204 and P203. The 100 coins are not enough to bribe the majority. He is unlucky and goes overboard. But here, it becomes tricky. Because neither P204 nor P203 would benefit from letting P205 go overboard (they have to give away also all their coins), they could also vote for his suggestion.

A Cinematic Example

The following movie snippet shows the essence of backward induction and the credibility of threads.

Note: This clip is from “Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb,” directed by Stanley Kubrick in 1964. (Length 4:22 min)

Limitations of Backward Induction

In reality, the observed behavior deviates from the expected Nash equilibrium. Reasons are manyfold:

- Fairness

- Altruistic punishment: People punish unfair behavior even if this is costly.

- The game has no determined end

- Different pay-offs than assumed

- Previous experiences

Register

Register Sign in

Sign in