Prompt engineering vs. classic NLP techniques: How do you build a good educational chatbot?

Artificial intelligence is changing the world of education. Chatbots in particular offer enormous potential: they answer individual questions, help learners understand tasks and are available at any time to support the learning process. However, for a chatbot to be really helpful, it must understand user queries correctly and provide precise answers, a challenge that is often underestimated.

Classic techniques from the field of natural language processing (NLP) have long been used for this purpose. NLP is concerned with enabling computers to understand, process and generate human language. These techniques primarily include the fine-tuning of pretrained models (e.g. BERT for question answering), but also more traditional algorithms such as logistic regression. Both have to be trained and adapted specifically for the task at hand, which is often costly and time-consuming.

In recent years, however, a new approach has become established: Prompt Engineering. Instead of laboriously adapting and training models, it relies on pretrained Large Language Models (LLMs) such as LLaMA or Mistral. Such models are steered towards a task directly through carefully formulated prompts, which makes it easy to control the desired behavior of the model. This saves time and cost and increases flexibility.
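To make the idea concrete, here is a minimal sketch of what "adapting a model through a prompt" can look like for sentiment analysis. The template wording, the label set and the helper names `build_sentiment_prompt` and `parse_label` are assumptions made for illustration; they are not the prompts used in the comparison described here. The call to the LLM itself is left out on purpose.

```python
from string import Template

# Hypothetical label set and prompt wording, chosen for illustration only.
LABELS = ("positive", "neutral", "negative")

SENTIMENT_PROMPT = Template(
    "You are a helpful assistant for a blockchain course.\n"
    "Classify the sentiment of the student's message as exactly one of: "
    "$labels.\n"
    "Answer with the label only.\n\n"
    "Message: $message\n"
    "Sentiment:"
)


def build_sentiment_prompt(message: str) -> str:
    """Fill the template with the allowed labels and the user message."""
    return SENTIMENT_PROMPT.substitute(
        labels=", ".join(LABELS),
        message=message.strip(),
    )


def parse_label(raw_answer: str, default: str = "neutral") -> str:
    """Map free-form model output to one of the allowed labels.

    Naive substring matching: good enough for a sketch, but an answer
    such as "not positive" would be misread as "positive".
    """
    answer = raw_answer.strip().lower()
    for label in LABELS:
        if label in answer:
            return label
    return default


if __name__ == "__main__":
    print(build_sentiment_prompt("I finally understand hashing!"))
```

Switching to a different task then means changing the template rather than retraining a model, which is where the savings in time and cost come from.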

Whether Prompt Engineering is actually superior to traditional NLP techniques was tested on an earlier version of the BCAM's Blockchain-Chatbot. The aim was to compare both approaches systematically and find out which one is better suited to which tasks in the education sector.

Python is well suited for implementing and evaluating both approaches, thanks to libraries such as Hugging Face Transformers, spaCy and scikit-learn.

Five typical NLP tasks were tested that cover the task areas of a chatbot:

- Named Entity Recognition (NER)

- Sentiment Analysis (SA)

- Question Answering (QA)

- Text Classification (TC)

- Text Summarization (TS)

Methodology

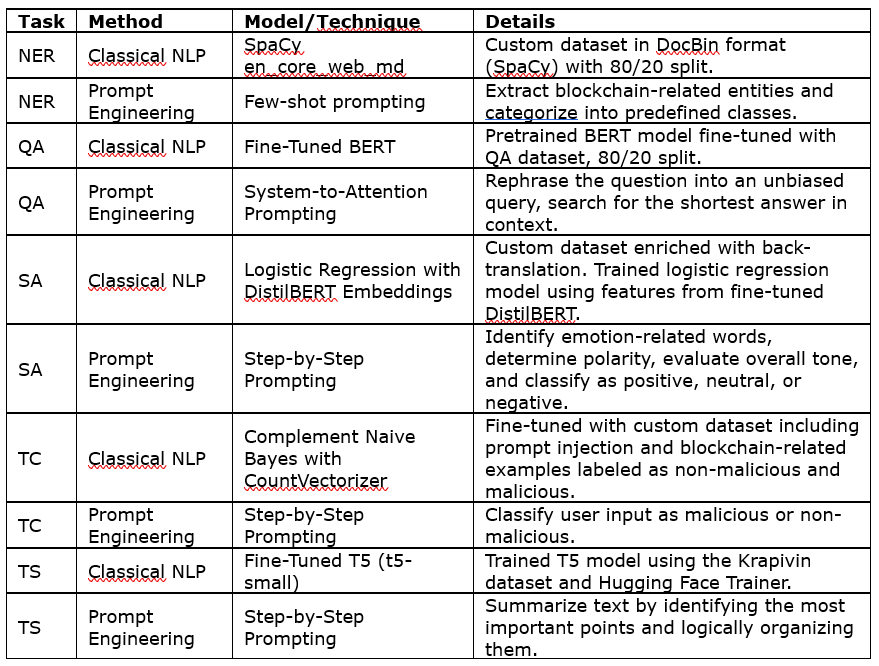

For the classic NLP approach, a separate model was trained for each task using proven methods. For Prompt Engineering, specific prompts were developed for each task and passed directly to an LLM. Three LLMs were tested (Llama3.1:70b, Mixtral:8x7b and Gemma2:27b) to make the results more robust. The techniques used for both approaches are summarized in Figure 1.

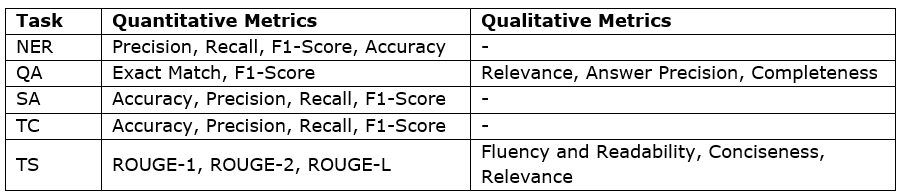

In addition, a RAG system with blockchain texts was used for Prompt Engineering in order to provide the models with relevant context. Separate test sets were created for each task, with which both the classic NLP techniques and the Prompt Engineering approaches were systematically tested. The comparison was mainly quantitative using standard metrics such as accuracy and F1 score; for more complex tasks such as QA and TS, a qualitative evaluation was also carried out (Figure 2).
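As a rough illustration of the quantitative part of the evaluation, the sketch below computes accuracy and F1 score from a list of gold labels and a list of predictions, using only the Python standard library. The article does not state how F1 was averaged across classes; macro averaging is an assumption here, and the function names are hypothetical.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Share of predictions that match the gold label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_per_label(y_true, y_pred):
    """F1 score for every label, from true/false positives and false negatives."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[t] += 1  # gold label t was missed
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-label F1 scores (assumed averaging scheme)."""
    scores = f1_per_label(y_true, y_pred)
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    gold = ["pos", "pos", "neg", "neg"]
    pred = ["pos", "neg", "neg", "neg"]
    print(accuracy(gold, pred), macro_f1(gold, pred))
```

Because the same functions can score the output of a trained classifier and the parsed output of an LLM, both approaches can be compared on identical test sets.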

Results

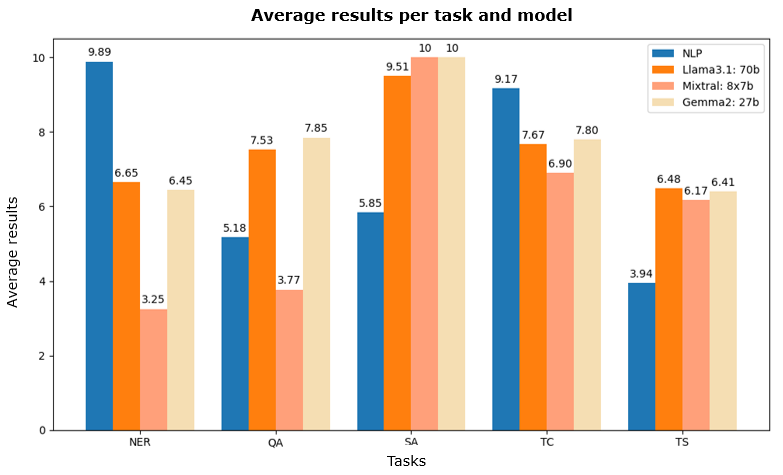

The tests (see Figure 3) paint a clear picture: Prompt Engineering performed significantly better in tasks such as sentiment analysis and text summarization. The strength of LLMs is particularly evident in recognizing emotions and in summarizing texts comprehensibly, in a way that is flexible, nuanced and context-aware.

Classic NLP techniques, on the other hand, were more convincing in structured tasks such as Named Entity Recognition (NER) and text classification. Here, the accuracy and reliability of specialized, fine-tuned models that recognize clear patterns and apply rules precisely proved their worth.

The picture for Question Answering was mixed: while Llama3.1:70b and Gemma2:27b performed better with the prompt approach, Mixtral:8x7b showed weaknesses. This suggests that performance depends strongly on the specific model, for example on its architecture and size.

Summary

- Prompt Engineering is convincing in conversational and interpretative tasks.

- Classic NLP models remain superior for tasks that require strict structure and precision.

Prompt engineering brings tangible benefits: it enables more natural conversations, allows quick adaptation to new topics and saves development effort, which makes it a good fit for chatbots in the dynamic education sector.

Nevertheless, classic NLP techniques still have their place: they can make chatbots more robust and secure – for example, by recognizing harmful or malicious requests through text classification, or by enabling targeted database queries through entity recognition.

The best solution? A combination of both worlds: flexible context flow through prompt engineering, supplemented by the precise structure and security of classic NLP models.
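One way such a combination could be wired together is sketched below. Everything in it is hypothetical: the regular expressions stand in for a trained text classifier that flags malicious requests, the small dictionary stands in for an NER model, and `route` only builds the prompt instead of calling an LLM. It is meant to show the control flow, not an actual implementation of the chatbot.

```python
import re

# Hypothetical stand-in for a trained text classifier that flags
# malicious requests; a real system would use a model, not patterns.
BLOCKED_PATTERNS = (
    r"\bignore (all|previous) instructions\b",
    r"\bsystem prompt\b",
)

# Hypothetical stand-in for an NER model: a tiny lookup of known terms.
KNOWN_ENTITIES = {
    "bitcoin": "CRYPTOCURRENCY",
    "ethereum": "CRYPTOCURRENCY",
    "proof of work": "CONSENSUS",
}


def is_malicious(query: str) -> bool:
    """Classic-NLP gate: reject requests that look like misuse."""
    lowered = query.lower()
    return any(re.search(pattern, lowered) for pattern in BLOCKED_PATTERNS)


def extract_entities(query: str):
    """Return (entity, label) pairs found in the query, e.g. for a database lookup."""
    lowered = query.lower()
    return [
        (name, label)
        for name, label in KNOWN_ENTITIES.items()
        if re.search(rf"\b{re.escape(name)}\b", lowered)
    ]


def route(query: str) -> dict:
    """Gate the query, extract entities, then build the prompt for the LLM."""
    if is_malicious(query):
        return {"action": "refuse", "entities": [], "prompt": None}
    entities = extract_entities(query)
    topics = ", ".join(name for name, _ in entities) or "none"
    prompt = (
        f"Known topics in the question: {topics}\n"
        "Answer the student's question clearly.\n"
        f"Question: {query}"
    )
    return {"action": "answer", "entities": entities, "prompt": prompt}


if __name__ == "__main__":
    print(route("How does Bitcoin use proof of work?"))
```

The structured steps run first and decide whether and with which context the LLM is called, so the flexible part of the pipeline only ever sees requests that have passed the precise part.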
